It’s the final week of DALMOOC. On the one hand, I am glad that from now on I’ll have time for my other hobbies again (my fish tank needs my attention); on the other hand, I look back on nine weeks of really interesting and challenging MOOC activities. Again, a sincere thanks to all of you! My feedback on the course structure and on my learning can be found in many of my blog posts, especially the one for week 7.

1. DALMOOC CMAP

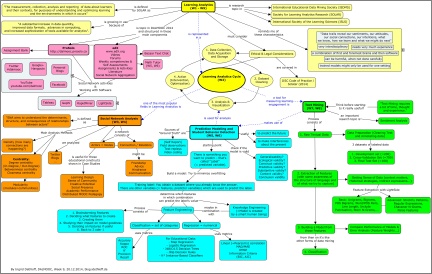

Creating a DALMOOC cmap was a very useful task; it reminded me of my Master’s studies some years ago, when we did that a lot in our learning group. I could have worked on it for a few more weeks (I had already spent many hours and several evenings on it). Normally I would have created a cmap for each of the 4 main units in DALMOOC and another one for the course structure; since everything went into a single map instead, there is a lot of content in my cmap. The cmap reflects my understanding of what was important and what I would like to keep in mind. I hope I got it right and there aren’t too many mistakes in it. Data source and method: I reread my blog posts and copied content and keywords into the cmap (no, I didn’t do text mining for that…). Some things are not covered in the map, for instance ProSolo functions (sorry, that doesn’t mean I didn’t like ProSolo), learning analytics software, more detailed connections between the units, …

I think others might be put off or confused by the sheer amount of information, but I used colours to make it a little easier to read and to show the original course units. Hopefully I can use the cmap in my job should the topic come up (at the moment I’ve got my hands full with a lot of other e-learning topics).

Download DALMOOC CMAP [jpg]

Download DALMOOC CMAP [pdf]

Download DALMOOC CMAP [cmap]

2. Learning Analytics in Germany

Based on competency 9.1, I thought it would be a good idea to collect some links about Learning Analytics in Germany. I hadn’t realized that so many conferences and working groups had the topic „Learning Analytics“ in their programmes this year (I attended some of those conferences…).

LA in general

- Article on e-teaching.org, the (most) important German e-learning web portal: http://www.e-teaching.org/didaktik/qualitaet/learning_analytics/ and pro/contra opinions: http://www.e-teaching.org/community/meinung/pro_con_learning_analytics

- Topic in one of the first German MOOCs „Open Course 2012 – Trends in E-Teaching“: http://opco12.de/4-15-juni-2012-learning-analytics/

- Special interest group „Gesellschaft für Informatik, Fachgruppe E-Learning, Arbeitskreis Learning Analytics“: http://fg-elearning.gi.de/fachgruppe-e-learning/arbeitskreise/

- Often-cited project/tool „LeMo“: http://www.lemo-projekt.de/publikationen/

LA as a conference topic in Germany in 2014 (selection)

- LEARNTEC conference 2014 (Karlsruhe), 4.2.2014: http://www.learntec.de/messe-karlsruhe-learntec/2014/media/data/diverses/LT14-NUR_KONGRESSPROGRAMME_–_WEB-Version_21012014.pdf

- Working group „DINI E-Learning“, DINI Zukunftswerkstatt Learning Analytics (Fulda), 17.6-18.6.2014: http://dini.de/veranstaltungen/workshops/zukunftswerkstatt2014/programm/ and results: http://dini.de/fileadmin/workshops/zukunftswerkstatt2014/2014-06_Fotoprotokoll_DINI_Zukunftswerkstatt.pdf

- DeLFI conference 2014 LA Workshops proceedings (Freiburg), 15.9.2014: http://ceur-ws.org/Vol-1227/

- Working group ZKI AK E-Learning Meeting (Kaiserslautern), 22.9.2014: https://www.rhrk.uni-kl.de/en/news/zki2014/arbeitskreise/ak-e-learning/

- Talk at Campus Innovation conference (Hamburg), 20.11.2014: https://lecture2go.uni-hamburg.de/veranstaltungen/-/v/16918

- Online Educa conference (Berlin), 3.-5.12.2014: http://www.online-educa.com/programme

- „Kompetenznetz E-learning Hessen“, expert forum „Learning Analytics mit Moodle“ (Fulda), 3.12.2014: http://www.gmw-online.de/2014/11/fachforum-learning-analytics-mit-moodle-am-3-12-2014-an-der-hochschulde-fulda/

- (upcoming) LEARNTEC conference 2015 (Karlsruhe), 27-28.1.2015: http://www.learntec.de/media/data/kongress_2/LEARNTEC15_Kongressprogramm_Vortragsprogramm.pdf

Important international societies that are doing research in Learning Analytics are:

- International Educational Data Mining Society (IEDMS)

- Society for Learning Analytics Research (SoLAR)

- International Society of the Learning Sciences (ISLS)

(Update 31.12.14)

And that’s my certificate: