This article describes my first impressions of the “Oculus Quest”. My previous VR experience was either smartphone-based (Google Cardboard, Samsung Gear VR) or on a standalone headset that needs no gaming PC (Oculus Go).

My topic is low-threshold access to VR and AR, and the Oculus Quest marks a considerable next step for VR. At 449 euros it costs roughly twice as much as the Oculus Go, but the wireless 6 DoF tracking (that is, tracking of position as well as orientation) makes the really big difference.

The extremely well-made Quest tutorial “First Steps” shows off the possibilities that are new compared with 3 DoF: grabbing and moving objects with the two hand controllers and your own arm movements is great fun. You can watch the perfectly ordinary paper plane fly its loops for a long time, and it is not only the ball and table tennis paddle that work extremely well with the inside-out tracking (tracking algorithm: “Oculus Insight”). When the Quest starts up, you define a Guardian zone that marks out the space in which you can move around freely. Fortunately the headset remembers these coordinates, so the next day I could simply carry on in the same room (a kitchen with a tiled floor and plenty of daylight).

The demo version of “Beat Saber” is a huge step up from the applications generally possible on the Samsung Gear VR / Oculus Go. As the early reviews already said, on the Oculus Quest it is a properly athletic and very enjoyable application, and the built-in sound is perfectly fine too.

I find it astonishing how many people have evidently bought the Quest even though they already own the more capable, gaming-PC-tethered Oculus Rift / HTC Vive. Sure, wireless fun is great, but then you should not be surprised that letting others watch along is hardly possible. What does work right away is pairing the Oculus app on the smartphone (the one used for the initial setup) with the Quest, so that you can at least see on the smartphone what the person currently wearing the Oculus Quest sees. Unfortunately this does not work with “Beat Saber”, for example, but at least the “First Steps” tutorial (see photo below) can be followed to some extent as a spectator.

My first impression of the display is very good, and it is a considerable step up in quality from my previous VR options: my 360-degree photos (copied to the Quest via USB cable) look incomparably better than on the Oculus Go / Samsung Gear VR I used before. That may be down to the OLED display with 72 Hz and a resolution of 1440×1600 per eye. Adjusting the eye distance is much better too. The Oculus Go had no adjustment dial for this, and the only way I could really control the focus was to push the Go further down my face, which in turn was extremely uncomfortable. At first I found the Oculus Quest uncomfortable and heavy as well, but that passed quickly once I was busy in engaging applications.

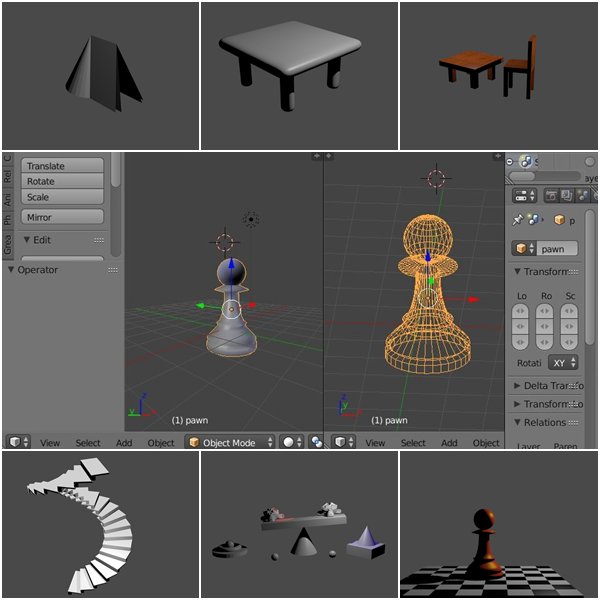

The Quest is (currently) aimed at gamers, with applications to match, which means I will not get around several purchases of roughly 20 euros each in order to keep testing. I am already very curious about “Tilt Brush” and “Job Simulator”. What will happen with Quest applications in the “Education” area remains to be seen.